publications

publications by category in reverse chronological order. generated by jekyll-scholar.

2024

- D-CAPTCHA++: A Study of Resilience of Deepfake CAPTCHA under Transferable Imperceptible Adversarial Attack. Hong-Hanh Nguyen-Le, Van-Tuan Tran, Dinh-Thuc Nguyen, and 1 more author. International Joint Conference on Neural Networks (IJCNN), 2024

Advances in generative AI have improved audio synthesis models, including text-to-speech and voice conversion. This raises concerns about their potential misuse for social manipulation and political interference, as synthetic speech has become indistinguishable from natural human speech. Several speech-generation programs are being used for malicious purposes, especially to impersonate individuals in phone calls. Detecting fake audio is therefore crucial for public safety and for safeguarding the integrity of information. Recent research has proposed D-CAPTCHA, a system based on a challenge-response protocol, to distinguish fake phone calls from real ones. In this work, we study the resilience of this system and introduce a more robust version, D-CAPTCHA++, to defend against fake calls. Specifically, we first expose the vulnerability of D-CAPTCHA to a transferable imperceptible adversarial attack. We then mitigate this vulnerability by applying adversarial training to the D-CAPTCHA deepfake detectors and task classifiers.
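The adversarial-training defense described above can be illustrated on a toy model. This is a hedged sketch, not the D-CAPTCHA++ implementation: it assumes a logistic-regression "detector" over hypothetical 2-D features, and it uses the Fast Gradient Sign Method (FGSM) as a stand-in attack, whereas the paper's transferable imperceptible attack and its detectors may differ. The names `fgsm`, `train`, and `accuracy` are hypothetical.

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict(w, b, x):
    # Probability that x is "fake" (label 1) under a linear detector.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    # For logistic regression with binary cross-entropy, dLoss/dx = (p - y) * w.
    # FGSM shifts each feature by eps in the sign of that gradient, so the
    # perturbation is bounded by eps in the L-infinity norm.
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def train(data, eps=0.0, epochs=200, lr=0.1, seed=0):
    # SGD on binary cross-entropy. With eps > 0, each example is replaced by
    # its FGSM-perturbed version before the update (adversarial training).
    rng = random.Random(seed)
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        order = list(data)
        rng.shuffle(order)
        for x, y in order:
            x_in = fgsm(w, b, x, y, eps) if eps > 0 else x
            err = predict(w, b, x_in) - y
            w = [wi - lr * err * xi for wi, xi in zip(w, x_in)]
            b -= lr * err
    return w, b


def accuracy(w, b, data, eps=0.0):
    # Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0).
    hits = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return hits / len(data)


if __name__ == "__main__":
    # Hypothetical 2-D features for real (0) and fake (1) audio clips.
    data = [([1.0, 1.2], 1), ([1.5, 0.8], 1), ([2.0, 1.0], 1),
            ([-1.0, -1.2], 0), ([-1.5, -0.8], 0), ([-2.0, -1.0], 0)]
    w_std, b_std = train(data)
    w_adv, b_adv = train(data, eps=0.5)
    print("clean:", accuracy(w_std, b_std, data), accuracy(w_adv, b_adv, data))
    print("fgsm:", accuracy(w_std, b_std, data, 0.5), accuracy(w_adv, b_adv, data, 0.5))
```

Training with `eps > 0` fits the detector on worst-case inputs inside an L-infinity ball of radius `eps`, which is the core idea of adversarial training; a real audio detector would run the same loop over a deep network and waveform or spectrogram inputs.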

- Personalized Privacy-Preserving Framework for Cross-Silo Federated Learning. Van-Tuan Tran, Huy-Hieu Pham, and Kok-Seng Wong. IEEE Transactions on Emerging Topics in Computing, 2024

Federated learning (FL) has recently emerged as a promising decentralized deep learning (DL) framework that enables DL models to be trained collaboratively across clients without sharing private data. However, when the central party is active and dishonest, the data of individual clients might be perfectly reconstructed, making leakage of sensitive information highly likely. Moreover, FL suffers from non-independent and identically distributed (non-IID) data among clients, which degrades inference performance on local clients’ data. In this paper, we propose a novel framework, Personalized Privacy-Preserving Federated Learning (PPPFL), focusing on cross-silo FL, to overcome these challenges. Specifically, we introduce a stabilized variant of the Model-Agnostic Meta-Learning (MAML) algorithm to collaboratively train a global initialization from clients’ synthetic data generated by Differentially Private Generative Adversarial Networks (DP-GANs). After convergence, each client locally adapts the global initialization to its private data. Through extensive experiments, we empirically show that our proposed framework outperforms multiple FL baselines on different datasets, including MNIST, Fashion-MNIST, CIFAR-10, and CIFAR-100.
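The pipeline described above (meta-learn a shared initialization, then adapt it locally) can be sketched on a toy problem. This is a minimal sketch under stated assumptions, not PPPFL itself: it uses first-order MAML rather than the paper's stabilized MAML variant, fits a scalar linear model `y = w * x`, and treats each client's "synthetic" data as plain lists standing in for DP-GAN samples, so no differential privacy is modeled. The names `fomaml`, `adapt`, and `make_client` are hypothetical.

```python
def mse(w, xs, ys):
    # Mean squared error of the scalar model y_hat = w * x.
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w, xs, ys):
    # d/dw of the mean squared error above.
    return sum(2 * x * (w * x - y) for x, y in zip(xs, ys)) / len(xs)


def fomaml(clients, rounds=100, inner_lr=0.1, outer_lr=0.1, w0=0.0):
    """First-order MAML across clients.

    clients: list of (support_xs, support_ys, query_xs, query_ys).
    In PPPFL these would be DP-GAN synthetic samples; here they are plain lists.
    """
    w = w0
    for _ in range(rounds):
        meta_grads = []
        for sx, sy, qx, qy in clients:
            # Inner loop: one local gradient step on the support set.
            w_adapted = w - inner_lr * mse_grad(w, sx, sy)
            # First-order approximation: query-set gradient at the adapted weight.
            meta_grads.append(mse_grad(w_adapted, qx, qy))
        # Server update: average the clients' meta-gradients.
        w -= outer_lr * sum(meta_grads) / len(meta_grads)
    return w


def adapt(w, xs, ys, steps=5, lr=0.1):
    # Local personalization: fine-tune the global initialization on private data.
    for _ in range(steps):
        w -= lr * mse_grad(w, xs, ys)
    return w


def make_client(slope):
    # Hypothetical client whose data lie on the line y = slope * x.
    sx, qx = [0.5, 1.0], [1.5, 2.0]
    return sx, [slope * x for x in sx], qx, [slope * x for x in qx]


if __name__ == "__main__":
    clients = [make_client(s) for s in (1.0, 2.0, 3.0)]
    w_init = fomaml(clients)
    xs = [0.5, 1.0, 1.5, 2.0]
    ys = [3.0 * x for x in xs]
    w_local = adapt(w_init, xs, ys)
    print(w_init, mse(w_init, xs, ys), w_local, mse(w_local, xs, ys))
```

With client slopes 1.0, 2.0, and 3.0 and identical inputs across clients, the learned initialization converges to about 2.0, the mean slope, and a few local steps then move a client toward its own slope.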

2023

-

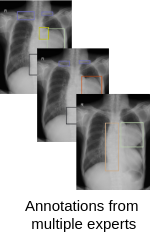

- Learning from Multiple Expert Annotators for Enhancing Anomaly Detection in Medical Image Analysis. Khiem H. Le, Tuan V. Tran, Hieu H. Pham, and 3 more authors. IEEE Access, 2023

Recent years have seen phenomenal growth in computer-aided diagnosis systems that use machine learning algorithms for anomaly detection in medical images. However, the performance of these algorithms depends heavily on label quality, since the subjectivity of a single annotator can reduce the reliability of medical image datasets. To alleviate this problem, aggregating labels from multiple radiologists with different levels of expertise has become an established practice. In particular, depending on their own biases and proficiency levels, different qualified experts provide their own estimates of the "true" bounding boxes that mark the anomaly observations. Learning directly from these inconsistent labels degrades the performance of machine learning networks. In this paper, we propose a simple yet effective approach for improving the performance of neural networks on anomaly detection tasks by estimating the hidden true labels from the multiple annotations. A re-weighted loss function is also used to improve the detection capacity of the networks. We conduct an extensive experimental evaluation of the proposed approach on both simulated and real-world medical imaging datasets, MED-MNIST and VinDr-CXR. The experimental results show that our approach captures the reliability of different annotators and outperforms relevant baselines that do not consider the disagreements among annotators.
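Estimating a hidden label from several annotators' boxes can be illustrated with a simple truth-inference heuristic. The sketch assumes each annotator draws exactly one box `(x1, y1, x2, y2)` per image; it takes a coordinate-wise median as a robust consensus, scores each annotator's reliability as the inverse of their mean squared deviation from that consensus, and returns the reliability-weighted mean box. This generic heuristic is chosen for illustration only and is not the estimator or re-weighted loss from the paper; `fuse_annotations` and the annotators in the example are hypothetical.

```python
from statistics import median


def fuse_annotations(boxes_by_annotator, eps=1e-6):
    """boxes_by_annotator: dict annotator -> list of boxes (one per image),
    each box a tuple (x1, y1, x2, y2). Returns (fused_boxes, weights)."""
    annotators = list(boxes_by_annotator)
    n_images = len(boxes_by_annotator[annotators[0]])

    # 1) Robust consensus: coordinate-wise median across annotators.
    consensus = [
        tuple(median(boxes_by_annotator[a][i][k] for a in annotators) for k in range(4))
        for i in range(n_images)
    ]

    # 2) Reliability: inverse of each annotator's mean squared deviation
    #    from the consensus (eps avoids division by zero).
    weights = {}
    for a in annotators:
        err = sum(
            sum((boxes_by_annotator[a][i][k] - consensus[i][k]) ** 2 for k in range(4))
            for i in range(n_images)
        ) / n_images
        weights[a] = 1.0 / (err + eps)

    # 3) Estimated hidden label: reliability-weighted mean of the boxes.
    total = sum(weights.values())
    fused = [
        tuple(
            sum(weights[a] * boxes_by_annotator[a][i][k] for a in annotators) / total
            for k in range(4)
        )
        for i in range(n_images)
    ]
    return fused, weights


if __name__ == "__main__":
    boxes = {
        "A": [(11, 10, 50, 50), (20, 31, 60, 80)],  # small random errors
        "B": [(10, 10, 49, 50), (20, 30, 61, 80)],  # small random errors
        "C": [(20, 20, 60, 60), (30, 40, 70, 90)],  # systematic +10 offset
    }
    fused, weights = fuse_annotations(boxes)
    print(weights)
    print(fused)
```

In the toy example, annotator C, who is systematically offset by 10 pixels, gets a weight several hundred times smaller than A or B, so it barely moves the fused boxes.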